Machine Learing Project: iris

1 minute read

Machine Learing project on iris dataset

Importing Library

# import warnings filter

from warnings import simplefilter

# ignore all future warnings

simplefilter(action='ignore', category=FutureWarning)

import numpy as np

import pandas as pd

import seaborn as sns

sns.set_palette('husl')

import matplotlib.pyplot as plt

%matplotlib inline

from sklearn import metrics

from sklearn.neighbors import KNeighborsClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

Reading .csv file

data = pd.read_csv('Iris.csv')

Preview of Data

data['Species'].value_counts()

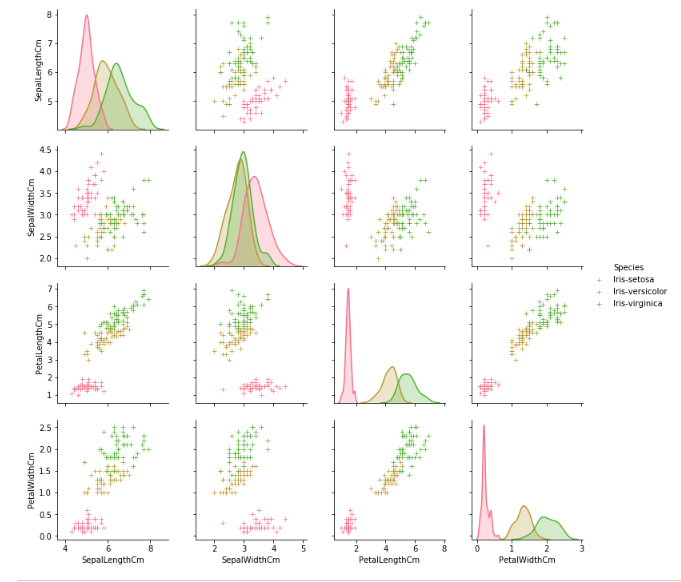

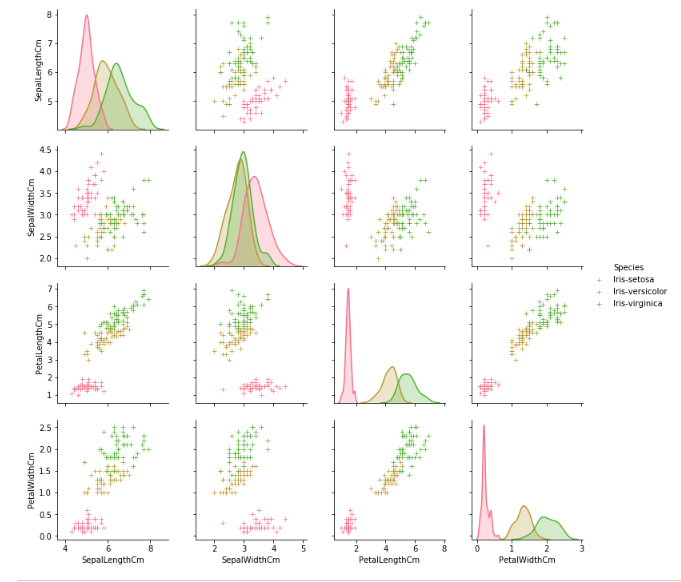

Data Visualization

tmp = data.drop('Id', axis=1)

g = sns.pairplot(tmp, hue='Species', markers='+')

plt.show()

g = sns.violinplot(y='Species', x='SepalLengthCm', data=data, inner='quartile')

plt.show()

g = sns.violinplot(y='Species', x='SepalWidthCm', data=data, inner='quartile')

plt.show()

g = sns.violinplot(y='Species', x='PetalLengthCm', data=data, inner='quartile')

plt.show()

g = sns.violinplot(y='Species', x='PetalWidthCm', data=data, inner='quartile')

plt.show()

Modeling with scikit-learn

X = data.drop(['Id', 'Species'], axis=1)

y = data['Species']

# print(X.head())

print(X.shape)

# print(y.head())

print(y.shape)

y_prediction = model.predict(x_test)

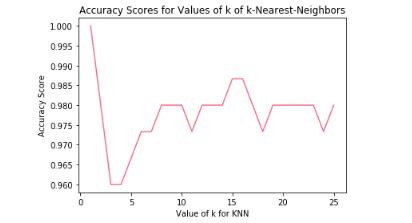

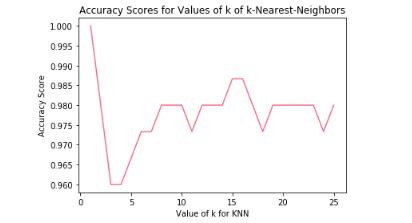

Train and test on the same dataset

# experimenting with different n values

k_range = list(range(1,26))

scores = []

for k in k_range:

knn = KNeighborsClassifier(n_neighbors=k)

knn.fit(X, y)

y_pred = knn.predict(X)

scores.append(metrics.accuracy_score(y, y_pred))

plt.plot(k_range, scores)

plt.xlabel('Value of k for KNN')

plt.ylabel('Accuracy Score')

plt.title('Accuracy Scores for Values of k of k-Nearest-Neighbors')

plt.show()

logreg = LogisticRegression()

logreg.fit(X, y)

y_pred = logreg.predict(X)

print(metrics.accuracy_score(y, y_pred))

### mean_squared_error

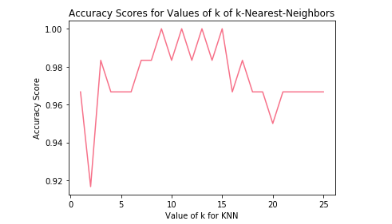

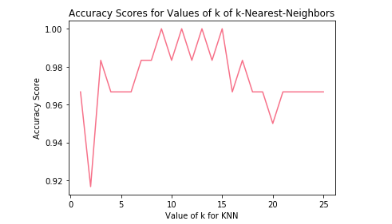

Split the dataset into a training set and a testing set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=5)

print(X_train.shape)

print(y_train.shape)

print(X_test.shape)

print(y_test.shape)

# experimenting with different n values

k_range = list(range(1,26))

scores = []

for k in k_range:

knn = KNeighborsClassifier(n_neighbors=k)

knn.fit(X_train, y_train)

y_pred = knn.predict(X_test)

scores.append(metrics.accuracy_score(y_test, y_pred))

plt.plot(k_range, scores)

plt.xlabel('Value of k for KNN')

plt.ylabel('Accuracy Score')

plt.title('Accuracy Scores for Values of k of k-Nearest-Neighbors')

plt.show()

accuracy_score

logreg = LogisticRegression()

logreg.fit(X_train, y_train)

y_pred = logreg.predict(X_test)

print(metrics.accuracy_score(y_test, y_pred))

Choosing KNN to Model Iris Species Prediction with k = 12

knn = KNeighborsClassifier(n_neighbors=12)

knn.fit(X, y)

# make a prediction for an example of an out-of-sample observation

knn.predict([[6, 3, 4, 2]])